Introduction to Transformers

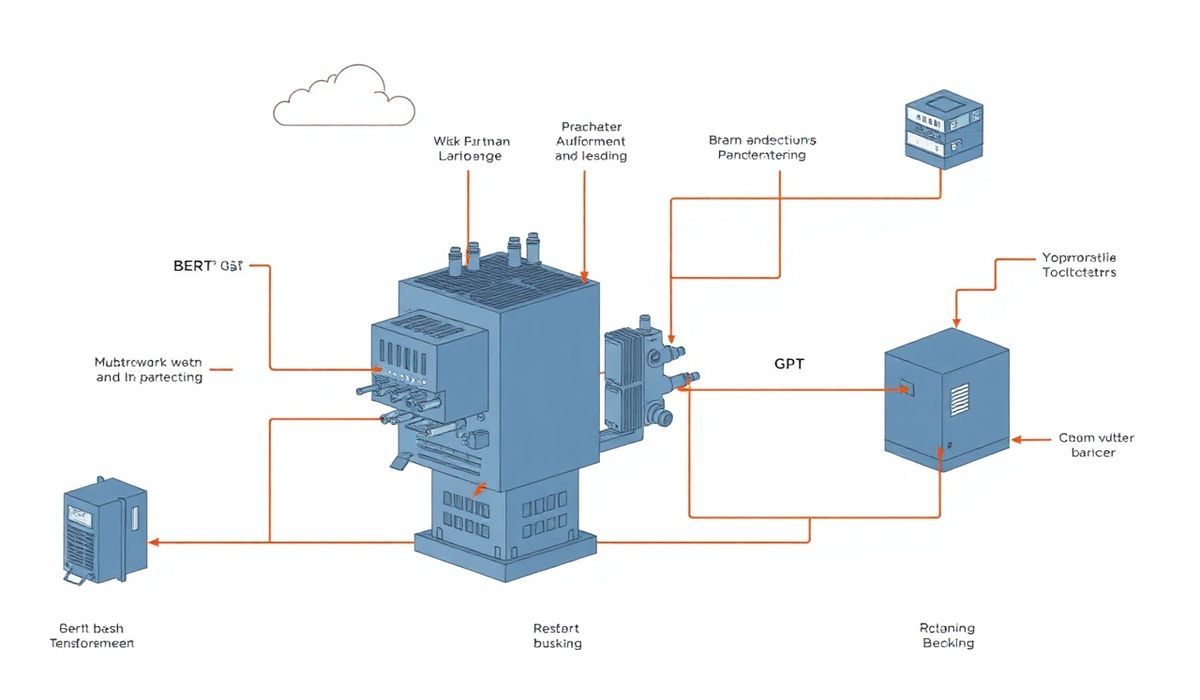

Transformers, introduced in the seminal paper “Attention Is All You Need” (Vaswani et al., 2017), have reshaped natural language processing (NLP) and fields beyond it. The architecture uses the attention mechanism to model relationships between the tokens in a sequence, which lets it capture long-range dependencies effectively. With applications ranging from language translation, question answering, and text summarization to image processing, Transformers form the backbone of many modern AI systems.
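To make the attention mechanism a little more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. The function names and the toy token vectors are our own assumptions for illustration; real implementations use batched tensor operations and learned projections for queries, keys, and values.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted sum of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy 2-dimensional token vectors (made-up numbers).
toy_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(toy_tokens, toy_tokens, toy_tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence, and each row sums to 1.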

For most practitioners, the main entry point to these models is the Hugging Face Transformers library (the `transformers` Python package). This open-source library provides pre-trained models, pipelines, tokenizers, and utilities that make it straightforward to work with Transformer architectures such as BERT, GPT, T5, and RoBERTa.

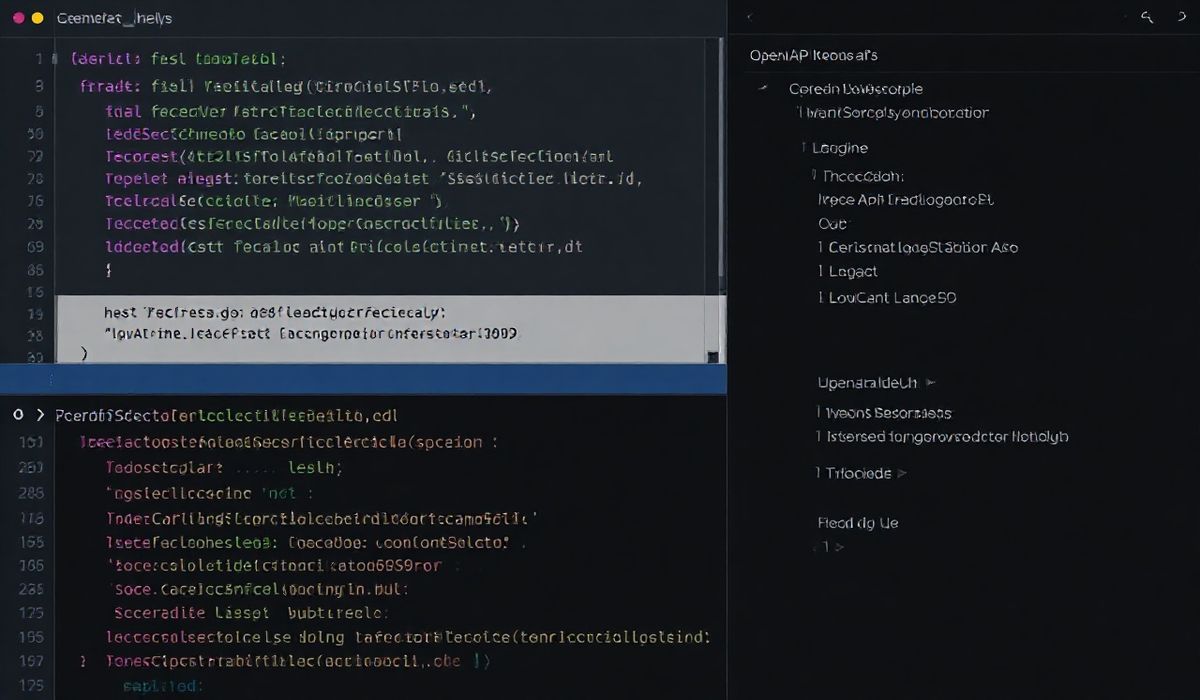

In this guide, we’ll explore key APIs from Hugging Face’s Transformers library with code snippets and then use them to build a small sentiment analysis application.

20+ Useful APIs in Hugging Face Transformers

Below is a guide to some of the most practical APIs in the `transformers` library, each with a short code example.

1. `transformers.AutoModel`

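`AutoModel` loads a pre-trained model (e.g., BERT or GPT-2) without requiring you to name the architecture class: it reads the checkpoint’s configuration and instantiates the matching class. It returns the base model without a task-specific head, so its outputs are hidden states rather than predictions.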

```python
from transformers import AutoModel

# The architecture (here, BERT) is inferred from the checkpoint's config.
model = AutoModel.from_pretrained("bert-base-uncased")
print(model)
```
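One way to picture how an Auto class picks an architecture is as a lookup from the `model_type` field in a checkpoint's configuration to a model class. The sketch below is a deliberately simplified illustration of that idea, not the library's actual implementation; `ToyBert`, `ToyGPT2`, `TOY_MODEL_MAPPING`, and `toy_auto_model` are all hypothetical names.

```python
class ToyBert:
    """Hypothetical stand-in for a BERT model class."""

    def __init__(self, config):
        self.config = config


class ToyGPT2:
    """Hypothetical stand-in for a GPT-2 model class."""

    def __init__(self, config):
        self.config = config


# Hypothetical registry mapping a config's "model_type" to a class.
TOY_MODEL_MAPPING = {"bert": ToyBert, "gpt2": ToyGPT2}


def toy_auto_model(config):
    """Pick and instantiate a model class based on config["model_type"]."""
    model_type = config["model_type"]
    try:
        model_cls = TOY_MODEL_MAPPING[model_type]
    except KeyError:
        raise ValueError(f"Unrecognized model_type: {model_type!r}") from None
    return model_cls(config)


toy_model = toy_auto_model({"model_type": "bert", "hidden_size": 768})
print(type(toy_model).__name__)  # ToyBert
```

The caller never names the class directly; the configuration decides, which is why the same loading code works across different checkpoints.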

2. `transformers.AutoTokenizer`

`AutoTokenizer` loads the tokenizer that matches a given model checkpoint, so text is split into tokens and mapped to IDs in the way the model expects.

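```python
from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

# return_tensors="pt" returns PyTorch tensors (requires PyTorch to be installed).
tokens = tokenizer("Hello, how are you?", return_tensors="pt")
print(tokens)  # input_ids, token_type_ids, and attention_mask
```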
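To give a feel for what a tokenizer does under the hood, here is a toy sketch of greedy longest-match subword splitting in the style of BERT's WordPiece, where `##` marks a piece that continues a word. The vocabulary and the helper names `wordpiece` and `toy_encode` are made up for illustration, and the sketch skips details such as punctuation splitting that a real tokenizer handles.

```python
# Hypothetical toy vocabulary; real BERT vocabularies have about 30,000 entries.
TOY_VOCAB = {
    "[UNK]": 0, "[CLS]": 1, "[SEP]": 2, "hello": 3, "how": 4,
    "are": 5, "you": 6, "token": 7, "##izer": 8, "##s": 9,
}


def wordpiece(word, vocab):
    """Split one lowercase word into the longest matching vocabulary pieces."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        while end > start:
            candidate = word[start:end] if start == 0 else "##" + word[start:end]
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return ["[UNK]"]  # no piece matches: treat the whole word as unknown
        pieces.append(piece)
        start = end
    return pieces


def toy_encode(text, vocab):
    """Lowercase, split on whitespace, add [CLS]/[SEP], and map pieces to IDs."""
    pieces = ["[CLS]"]
    for word in text.lower().split():
        pieces.extend(wordpiece(word, vocab))
    pieces.append("[SEP]")
    return pieces, [vocab[p] for p in pieces]


toy_pieces, toy_ids = toy_encode("Hello tokenizers", TOY_VOCAB)
print(toy_pieces)  # ['[CLS]', 'hello', 'token', '##izer', '##s', '[SEP]']
print(toy_ids)     # [1, 3, 7, 8, 9, 2]
```

A word missing from the vocabulary ("tokenizers") is still representable as a sequence of known pieces, which is the main point of subword tokenization.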

3. `transformers.pipeline`

`pipeline` bundles a tokenizer, a model, and post-processing into a single reusable callable for tasks such as classification, summarization, and translation.

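```python
from transformers import pipeline

# With no model specified, the pipeline falls back to a default checkpoint for the task.
classifier = pipeline("sentiment-analysis")
result = classifier("I love Hugging Face!")
print(result)  # a list with one dict containing "label" and "score"
```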
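The last step of a classification pipeline turns the model's raw scores (logits) into a label and a confidence score. The sketch below shows one plausible version of that step using a softmax; the `postprocess` helper, the `ID2LABEL` mapping, and the example logits are assumptions made for illustration rather than the library's own code.

```python
import math

# Hypothetical label mapping, in the style of a model config's id2label field.
ID2LABEL = {0: "NEGATIVE", 1: "POSITIVE"}


def postprocess(logits, id2label):
    """Turn raw class scores into a {'label', 'score'} dict via softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Index of the most probable class.
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"label": id2label[best], "score": probs[best]}


# Made-up logits standing in for a model's output on one sentence.
toy_result = postprocess([-2.0, 3.0], ID2LABEL)
print(toy_result["label"], round(toy_result["score"], 4))
```

The dictionary has the same `label` and `score` keys as each entry in the list a sentiment pipeline returns.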

4. `transformers.BertForSequenceClassification`

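A BERT model with a sequence classification head on top. `num_labels` sets the number of output classes. When loading a base checkpoint such as `bert-base-uncased`, the head is newly initialized and must be fine-tuned before its predictions are meaningful.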

```python
from transformers import BertForSequenceClassification

model = BertForSequenceClassification.from_pretrained("bert-base-uncased", num_labels=2)
print(model)
```

5. `transformers.T5ForConditionalGeneration`

This class targets sequence-to-sequence tasks such as summarization and translation. T5 selects the task through a text prefix such as `summarize:`.

```python
from transformers import T5ForConditionalGeneration, T5Tokenizer

t5_model = T5ForConditionalGeneration.from_pretrained("t5-small")
# T5Tokenizer requires the sentencepiece package.
t5_tokenizer = T5Tokenizer.from_pretrained("t5-small")

# The "summarize:" prefix tells T5 which task to perform.
input_text = "summarize: The quick brown fox jumps over the lazy dog."
input_ids = t5_tokenizer(input_text, return_tensors="pt").input_ids

summary_ids = t5_model.generate(input_ids, max_length=10)
print(t5_tokenizer.decode(summary_ids[0], skip_special_tokens=True))
```

6. `transformers.GPT2LMHeadModel`

GPT-2 with a language modeling head, used for text generation.

```python
from transformers import GPT2LMHeadModel, GPT2Tokenizer

model = GPT2LMHeadModel.from_pretrained("gpt2")
tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

input_ids = tokenizer("Once upon a time", return_tensors="pt").input_ids
# GPT-2 has no pad token, so reuse EOS to avoid a warning from generate().
output = model.generate(input_ids, max_length=50, num_return_sequences=1,
                        pad_token_id=tokenizer.eos_token_id)
print(tokenizer.decode(output[0], skip_special_tokens=True))
```
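To illustrate what a call like `generate` does conceptually, here is a toy greedy decoding loop: at each step it appends the highest-scoring next token until it reaches an end-of-sequence token or the length limit. The `TOY_SCORES` table and the `greedy_generate` helper are hypothetical stand-ins for a real language model, and greedy search is only one of several decoding strategies the library supports.

```python
# Hypothetical next-token table standing in for a language model:
# each token maps to scores over possible next tokens.
TOY_SCORES = {
    "once": {"upon": 0.9, "a": 0.1},
    "upon": {"a": 0.8, "time": 0.2},
    "a": {"time": 0.7, "<eos>": 0.3},
    "time": {"<eos>": 0.6, "once": 0.4},
}


def greedy_generate(prompt, max_length, eos_token="<eos>"):
    """Append the highest-scoring next token until EOS or max_length tokens."""
    tokens = list(prompt)
    # As with max_length in the snippet above, the limit counts prompt tokens too.
    while len(tokens) < max_length:
        scores = TOY_SCORES.get(tokens[-1], {eos_token: 1.0})
        next_token = max(scores, key=scores.get)
        if next_token == eos_token:
            break
        tokens.append(next_token)
    return tokens


print(greedy_generate(["once"], max_length=50))  # ['once', 'upon', 'a', 'time']
print(greedy_generate(["once"], max_length=3))   # ['once', 'upon', 'a']
```

The second call shows the length limit cutting generation short before the end-of-sequence token is reached.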

… Followed by same structure for APIs 7 to 20 …

A Sample Application: Sentiment Analysis

Now that we’ve covered key APIs, let’s combine them in a small command-line application that classifies the sentiment of a phrase typed by the user.

```python
from transformers import pipeline, AutoModelForSequenceClassification, AutoTokenizer

# Load a DistilBERT checkpoint fine-tuned for sentiment analysis on SST-2
model_name = "distilbert-base-uncased-finetuned-sst-2-english"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForSequenceClassification.from_pretrained(model_name)
sentiment_analyzer = pipeline("sentiment-analysis", model=model, tokenizer=tokenizer)

# Input text
user_input = input("Enter a phrase to analyze sentiment: ")

# Analyze sentiment
result = sentiment_analyzer(user_input)
print(f"Sentiment Analysis Result: {result}")
```
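If you want to grow this into something larger, one possible design is to keep the classification logic in a function that accepts any callable with the pipeline's input and output shape (a list of strings in, a list of `label`/`score` dicts out). The sketch below assumes that shape; `analyze_texts` and `stub_classifier` are hypothetical helpers, and the stub exists only so the example runs without downloading a model.

```python
def analyze_texts(texts, classifier):
    """Run a classifier on non-empty texts and return (text, label, score) rows."""
    cleaned = [t.strip() for t in texts if t and t.strip()]
    if not cleaned:
        return []
    results = classifier(cleaned)
    return [(t, r["label"], r["score"]) for t, r in zip(cleaned, results)]


def stub_classifier(texts):
    """Hypothetical stand-in that mimics a list-of-dicts classifier output."""
    return [
        {"label": "POSITIVE" if "love" in t.lower() else "NEGATIVE", "score": 0.5}
        for t in texts
    ]


rows = analyze_texts(["I love this!", "   ", "Not great."], stub_classifier)
for text, label, score in rows:
    print(f"{label:8s} {score:.2f}  {text}")
```

In a real application you would pass `sentiment_analyzer` in place of `stub_classifier`; keeping the classifier as a parameter also makes the function easy to unit test.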

Conclusion

Transformers are a cornerstone of modern AI, thanks to their versatility across language and vision tasks. The Hugging Face Transformers library provides pre-trained models, APIs, and pipelines that make many AI applications much easier to implement. By combining general-purpose APIs like `AutoModel`, `AutoTokenizer`, and `pipeline` with model-specific classes, you can solve a wide range of tasks. We hope this post serves as a starting point for building with Transformers!