Introduction to Charset Normalizer

When working with diverse datasets or text files in different encodings, developers often need to work out which encoding a piece of text uses before they can read it correctly. charset-normalizer is a Python library that simplifies this process: it detects likely encodings, reports how plausible each candidate is, and decodes the bytes into Unicode strings. This guide introduces the library with practical API examples and an end-to-end application.

Why Use Charset Normalizer?

The charset-normalizer library is designed to:

- Automatically detect the encoding of text files or byte data.

- Report chaos and coherence scores that indicate how plausible each detected encoding is.

- Convert text from unknown encodings to Unicode safely and efficiently.

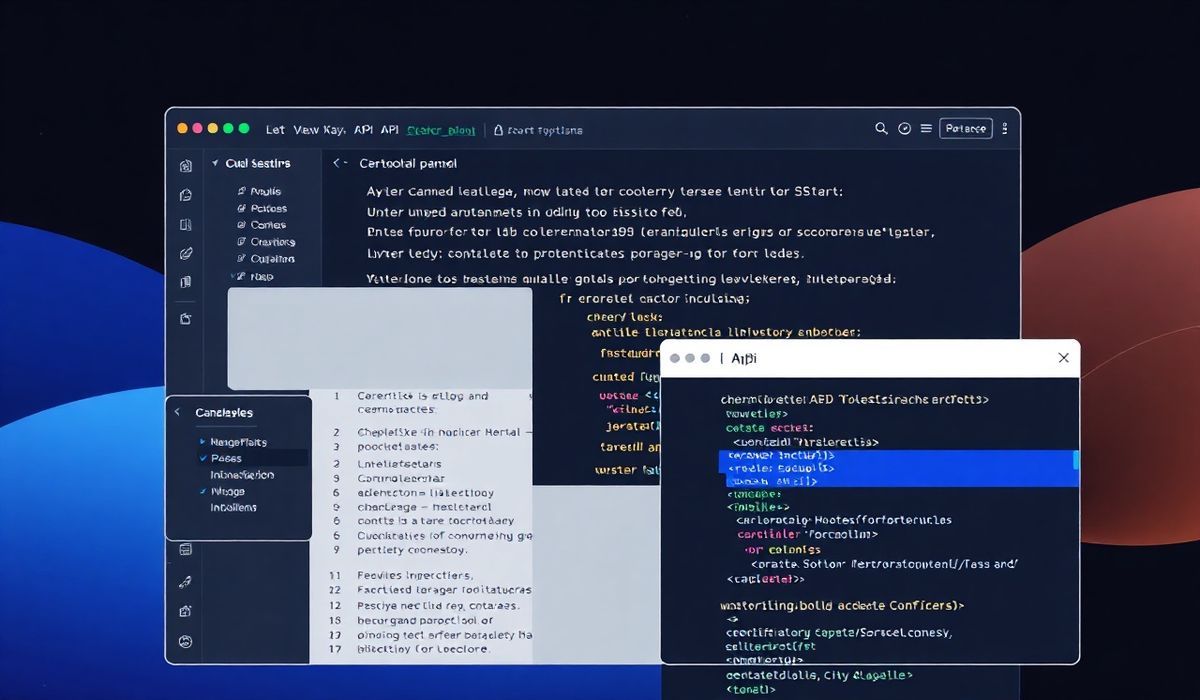

APIs and Usage Examples

Below are some useful APIs and examples from the charset-normalizer library:

1. Detecting Encoding with from_bytes

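```python
from charset_normalizer import from_bytes

# "Hello" encoded as UTF-16 little-endian, preceded by a byte order mark (BOM)
byte_sequence = b'\xff\xfeH\x00e\x00l\x00l\x00o\x00'

results = from_bytes(byte_sequence)

for result in results:
    # print(result) alone would show the decoded text, so print the details explicitly
    print(result.encoding, result.percent_chaos, result.percent_coherence)
```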
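The returned `CharsetMatches` object can also be asked directly for its top candidate. The sketch below, assuming charset-normalizer 3.x, uses the `bom` property to check whether a byte order mark was found at the start of the data, and shows that calling `str()` on a match yields the decoded text.

```python
from charset_normalizer import from_bytes

# "Hello" as UTF-16 little-endian with a leading byte order mark (BOM)
byte_sequence = b'\xff\xfeH\x00e\x00l\x00l\x00o\x00'

results = from_bytes(byte_sequence)
match = results.best()  # top-ranked candidate, or None if nothing fits

if match is not None:
    print(match.encoding)  # codec name in Python's naming, e.g. "utf_16"
    print(match.bom)       # True when a BOM/signature was found at the start
    print(str(match))      # decoded text; the utf_16 codec consumes the BOM
```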

2. Detecting Encoding of a File with from_path

```python
from charset_normalizer import from_path

file_path = 'example.txt'

results = from_path(file_path)

for result in results:
    # Each result is one candidate encoding; print(result) would show the decoded text
    print(result.encoding, result.language)
```
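If the data is already available as an open binary stream (for example, an uploaded file), `from_fp` accepts the file object directly. Note that it reads the stream's full contents before analysis. A minimal sketch, using an in-memory `io.BytesIO` stream as a stand-in for a real file opened with `open(path, "rb")`:

```python
import io

from charset_normalizer import from_fp

# In-memory stand-in for a file opened in binary mode
text = "Grüße aus Köln! Schöne Grüße an alle."
stream = io.BytesIO(text.encode("utf-8"))

best = from_fp(stream).best()

if best is not None:
    print(best.encoding)  # candidate codec name
    print(str(best))      # decoded text
```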

3. Converting Encoded Text to Unicode

```python
from charset_normalizer import from_bytes

byte_sequence = b'\xe4\xbd\xa0\xe5\xa5\xbd'  # "你好" encoded as UTF-8

result = from_bytes(byte_sequence).best()

if result:
    print(str(result))  # Decoded Unicode text
```
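Decoding is often only half of the job: you usually also want to store the result as UTF-8. A `CharsetMatch` provides `output()`, which re-encodes the decoded text (UTF-8 by default) and returns bytes. A minimal sketch, assuming the input carries a UTF-16 byte order mark so that detection is unambiguous:

```python
from charset_normalizer import from_bytes

original = "Привет, мир"
# Python's "utf-16" codec prepends a BOM, which makes detection unambiguous
utf16_bytes = original.encode("utf-16")

match = from_bytes(utf16_bytes).best()

if match is not None:
    utf8_bytes = match.output()  # re-encoded as UTF-8 by default
    print(utf8_bytes.decode("utf-8"))
```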

4. Using the best() Method for Optimal Result

The best() method returns the top-ranked match, or None if no candidate encoding fits the data. This is particularly useful when several encodings are plausible and you need a single answer.

```python
from charset_normalizer import from_bytes

byte_data = b'Bonjour tout le monde'

best_result = from_bytes(byte_data).best()

if best_result:
    print(f"Detected Encoding: {best_result.encoding}")
    print(f"Decoded Text: {str(best_result)}")
```
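When the input could plausibly be one of several legacy single-byte encodings, the top-ranked match may not be the one you expect. If you already know the plausible candidates, the `cp_isolation` parameter of `from_bytes` restricts detection to that list. A sketch, assuming (hypothetically) that the data is known to come from a Windows-1252 system:

```python
from charset_normalizer import from_bytes

text = "Le café est prêt et les élèves attendent près de la fenêtre ouverte."
legacy_bytes = text.encode("cp1252")  # hypothetical data from a Windows-1252 source

# Only the encodings listed in cp_isolation are considered as candidates
match = from_bytes(legacy_bytes, cp_isolation=["cp1252"]).best()

if match is not None:
    print(match.encoding)
    print(str(match))
```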

5. Generating Confidence Reports

```python
from charset_normalizer import from_path

file_path = 'test_document.txt'

results = from_path(file_path)

for result in results:
    # Lower chaos and higher coherence indicate a more plausible encoding
    print(f"Encoding: {result.encoding}")
    print(f"Confidence: {result.percent_chaos}% chaos, {result.percent_coherence}% coherence")
```
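For logging or auditing, it can help to collect these metrics into a structured report instead of printing them. The helper below, `confidence_report`, is a hypothetical name for illustration; it relies only on the `CharsetMatch` properties `encoding`, `language`, `percent_chaos`, and `percent_coherence`, and keeps the library's own ranking order.

```python
from charset_normalizer import from_path


def confidence_report(file_path):
    """Hypothetical helper: summarize every candidate encoding for a file."""
    report = []
    for match in from_path(file_path):  # iteration follows the library's ranking
        report.append({
            "encoding": match.encoding,
            "language": match.language,
            "chaos_percent": match.percent_chaos,          # lower is better
            "coherence_percent": match.percent_coherence,  # higher is better
        })
    return report
```

For example, `confidence_report('test_document.txt')` would return one dictionary per candidate encoding, best candidate first.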

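6. Using the chardet-Compatible detect Function

detect mirrors the API of the older chardet library, which makes it a convenient drop-in replacement in existing code. It takes bytes, not a file path, and returns a dictionary with encoding, language, and confidence keys. It does not stream the file, so read the content in binary mode first.

```python
from charset_normalizer import detect

file_path = 'large_document.txt'

# detect() expects bytes, so read the file in binary mode
with open(file_path, 'rb') as f:
    raw_data = f.read()

result = detect(raw_data)
print(result)  # dict with 'encoding', 'language' and 'confidence' keys
```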

Example Application: Text File Decoder

Here is an example Python application that leverages charset-normalizer to detect and convert the encoding of text files into Unicode:

```python
from charset_normalizer import from_path


def decode_text_file(file_path):
    results = from_path(file_path)
    best_result = results.best()  # None if no encoding fits

    if best_result:
        print(f"Detected encoding: {best_result.encoding}")
        print(f"Decoded content: {str(best_result)}")
    else:
        print("Encoding could not be reliably detected.")


if __name__ == "__main__":
    file_to_process = "sample.txt"
    decode_text_file(file_to_process)
```
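A natural extension of the decoder is to normalize files to UTF-8 on disk. The sketch below is a hypothetical `convert_to_utf8` helper (not part of charset-normalizer) that writes the detected text to a new file using `CharsetMatch.output()`, and raises an error rather than guessing when no encoding is detected.

```python
from charset_normalizer import from_path


def convert_to_utf8(src_path, dst_path):
    """Hypothetical helper: re-encode src_path as UTF-8 into dst_path.

    Returns the detected source encoding.
    """
    best = from_path(src_path).best()
    if best is None:
        # Refuse to guess; the caller decides how to handle undecodable files
        raise ValueError(f"Could not detect the encoding of {src_path!r}")
    with open(dst_path, "wb") as out:
        out.write(best.output())  # output() re-encodes as UTF-8 by default
    return best.encoding
```

For example, `convert_to_utf8("sample.txt", "sample.utf8.txt")` would write a UTF-8 copy of the file processed by the application above.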

Conclusion

The charset-normalizer library is a must-have tool for Python developers working with multilingual data or files in unknown encodings. Its APIs let you detect encodings and decode text reliably in most cases with minimal effort. Start exploring charset-normalizer today and simplify your text processing tasks!