Introduction to Word2Vec

Word2Vec is a widely used natural language processing (NLP) technique that changed how text is represented for machine learning applications. Introduced by researchers at Google in 2013, it is a family of models that generate vector embeddings (numerical vector representations) for words in a way that captures their meaning and semantic relationships. The resulting vectors are dense and continuous, and words with similar meanings are mapped to nearby locations in the vector space.

The key insight behind Word2Vec is the linguist J. R. Firth’s observation that “you shall know a word by the company it keeps”: a word’s meaning is reflected in the contexts it appears in. Word2Vec uses one of two model architectures to learn from those contexts:

- Continuous Bag of Words (CBOW): Predicts the target word from its surrounding context words.

- Skip-gram: Predicts the context words from a given target word.
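To make the difference between the two architectures concrete, here is a small pure-Python sketch that turns one sentence into training examples for each. The function name `training_examples` and the fixed window are illustrative assumptions; Gensim builds these examples internally (and, by default, randomly shrinks the window for each target word), so you never call anything like this yourself.

```python
def training_examples(tokens, window=2, mode="skipgram"):
    """Build Word2Vec-style training examples from one tokenized sentence.

    CBOW:      (list of context words, target word)
    Skip-gram: (target word, one context word) for every context word
    """
    examples = []
    for i, target in enumerate(tokens):
        # Context = up to `window` words on each side of position i
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        if mode == "cbow":
            examples.append((context, target))
        else:
            examples.extend((target, c) for c in context)
    return examples


sentence = ["python", "is", "great", "for", "nlp"]
print(training_examples(sentence, window=1, mode="cbow"))
# [(['is'], 'python'), (['python', 'great'], 'is'), (['is', 'for'], 'great'), (['great', 'nlp'], 'for'), (['for'], 'nlp')]
print(training_examples(sentence, window=1, mode="skipgram"))
# [('python', 'is'), ('is', 'python'), ('is', 'great'), ('great', 'is'), ('great', 'for'), ('for', 'great'), ('for', 'nlp'), ('nlp', 'for')]
```

CBOW produces one example per position, while skip-gram produces one example per (target, context) pair, which is why skip-gram sees more updates per sentence.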

Such embeddings can be used across a wide range of applications including sentiment analysis, document similarity, machine translation, question answering, and much more.

Behind the scenes, Word2Vec trains a shallow neural network (a single linear hidden layer) on one of these prediction tasks, and the learned hidden-layer weights become the word vectors. Python libraries such as Gensim wrap both training and querying in a few lines of code.
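For intuition about what that shallow network learns, the sketch below implements a single stochastic-gradient update of skip-gram with negative sampling, one of the training objectives Word2Vec uses (the other is hierarchical softmax), in plain NumPy. The function `sgns_step`, the toy vocabulary of 5 word IDs, and the hand-picked negative samples are hypothetical; real implementations such as Gensim draw negatives from a smoothed unigram distribution and are heavily optimized.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def sgns_step(w_in, w_out, center, context, negatives, lr=0.05):
    """One SGD update of skip-gram with negative sampling for a single (center, context) pair.

    w_in:  (vocab_size, dim) input vectors; these rows become the word embeddings.
    w_out: (vocab_size, dim) output ("context") vectors.
    Returns the loss measured before the update.
    """
    ids = np.array([context] + list(negatives))
    labels = np.zeros(len(ids))
    labels[0] = 1.0                              # 1 for the observed context word, 0 for negatives
    v = w_in[center].copy()
    u = w_out[ids]                               # fancy indexing returns a copy
    scores = sigmoid(u @ v)                      # predicted P(word is a real context word)
    loss = -np.log(scores[0]) - np.sum(np.log(1.0 - scores[1:]))
    g = scores - labels                          # gradient of the logistic loss w.r.t. each logit
    np.subtract.at(w_out, ids, lr * np.outer(g, v))   # update only the sampled output rows
    w_in[center] -= lr * (g @ u)                 # update the center word's input vector
    return loss


rng = np.random.default_rng(0)
vocab_size, dim = 5, 8
w_in = rng.uniform(-0.5, 0.5, size=(vocab_size, dim)) / dim   # small random input vectors
w_out = np.zeros((vocab_size, dim))                            # output vectors start at zero
losses = [sgns_step(w_in, w_out, center=0, context=1, negatives=[3, 4]) for _ in range(200)]
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

Because the output vectors start at zero (as in the reference word2vec C code), every score starts at 0.5 and the first loss is 3·ln 2 ≈ 2.079; repeating the update on the same pair drives it down. Only the rows for the center word and the sampled words change, which is what makes negative sampling cheap.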

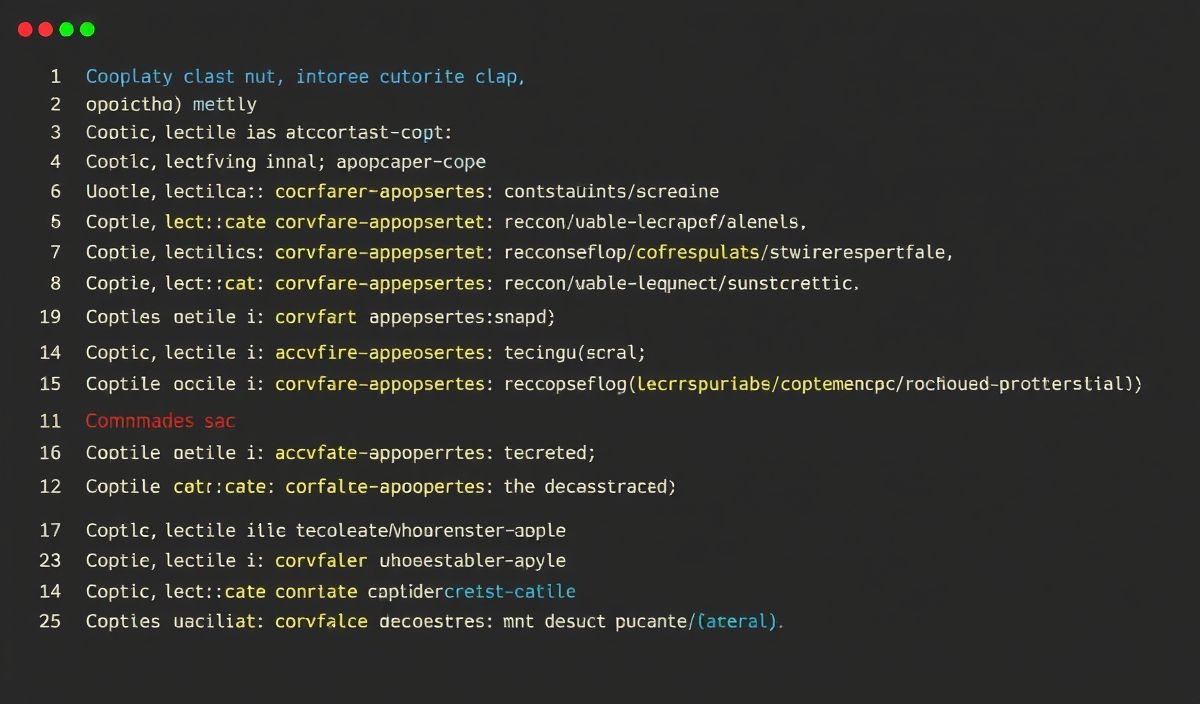

20+ Useful Word2Vec API Explanations and Code Snippets

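Below, we’ll go through the most commonly used Word2Vec APIs and methods using Python’s Gensim library, one of the most popular libraries for working with Word2Vec. The snippets use the Gensim 4.x API; Gensim 3.x called `vector_size` `size` and `index_to_key` `index2word`.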

To get started, install Gensim:

```shell
pip install gensim
```

1. Loading Pre-trained Word2Vec Embeddings

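You can either train a custom Word2Vec model or use pre-trained embeddings such as Google’s GoogleNews vectors: 300-dimensional vectors for about 3 million words and phrases, trained on roughly 100 billion words of Google News text.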

```python
from gensim.models import KeyedVectors

# Load pre-trained Word2Vec vectors (GoogleNews vectors)
# Download GoogleNews-vectors-negative300.bin from https://github.com/mmihaltz/word2vec-GoogleNews-vectors
word2vec = KeyedVectors.load_word2vec_format('GoogleNews-vectors-negative300.bin', binary=True)

# Check the vector for a specific word
print(word2vec['apple'])  # 300-dimensional vector
```

2. Train a Custom Word2Vec Model

You can train your own model using a corpus of your choice.

```python
from gensim.models import Word2Vec

# Example of a tokenized text corpus
corpus = [['I', 'love', 'programming'],
          ['Python', 'is', 'great', 'for', 'NLP'],
          ['Word2Vec', 'makes', 'text', 'processing', 'easy']]

# Training the Word2Vec model (CBOW: sg=0, Skip-gram: sg=1)
model = Word2Vec(sentences=corpus, vector_size=100, window=5, min_count=1, sg=0)

# Save and load the model
model.save('word2vec.model')
model = Word2Vec.load('word2vec.model')
```

3. Getting Word Embeddings

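You can get the vector representation of any word in the model’s vocabulary; looking up a word the model has never seen raises a `KeyError`.

```python
vector = model.wv['programming']
print(vector)  # 100-dimensional vector
```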

4. Check Similarity Between Words

Use the similarity method to find the cosine similarity between two words.

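```python
# 'like' does not appear in the toy corpus, so this uses the pre-trained vectors from section 1
similarity = word2vec.similarity('love', 'like')
print(f"Similarity between 'love' and 'like': {similarity}")
```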
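`similarity` returns the cosine of the angle between the two word vectors: their dot product divided by the product of their lengths. A minimal NumPy sketch of that computation, using made-up 3-dimensional vectors (hypothetical, not taken from any trained model):

```python
import numpy as np


def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their Euclidean norms."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


# Hypothetical toy vectors
love = [0.8, 0.6, 0.0]
like = [0.6, 0.8, 0.0]
car = [0.0, 0.0, 1.0]

print(cosine_similarity(love, like))  # about 0.96: the vectors point in similar directions
print(cosine_similarity(love, car))   # 0.0: the vectors are orthogonal
```

Because only the angle matters, scaling a vector does not change its similarity to anything, which is why Gensim compares normalized vectors.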

5. Find Most Similar Words

Find the top N words that are most similar to a given word.

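```python
similar_words = model.wv.most_similar('programming', topn=5)
# A list of (word, cosine similarity) pairs; on a three-sentence toy corpus these are close to random
print(similar_words)
```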

6. Finding the Odd Word Out

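Given a list of words, `doesnt_match` returns the one that fits least with the others: the word whose vector is least similar to the average of all the words’ vectors.

```python
# These words are not in the toy corpus, so this uses the pre-trained vectors from section 1
odd_word = word2vec.doesnt_match(['dog', 'cat', 'car', 'apple'])
print(f"The odd word is: {odd_word}")
```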
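Conceptually, this normalizes each word vector, averages them, and picks the word least similar to that average. A NumPy sketch of the idea with hypothetical toy vectors; `odd_one_out` is an illustrative helper, and Gensim’s own implementation may differ in details.

```python
import numpy as np


def odd_one_out(vectors):
    """Return the word whose unit vector is least similar to the mean of all unit vectors."""
    words = list(vectors)
    mat = np.array([np.asarray(vectors[w], dtype=float) for w in words])
    mat /= np.linalg.norm(mat, axis=1, keepdims=True)   # normalize each row to length 1
    mean = mat.mean(axis=0)
    mean /= np.linalg.norm(mean)
    scores = mat @ mean                                  # cosine similarity of each word to the mean
    return words[int(np.argmin(scores))]


# Hypothetical 3-d vectors: two animals and a vehicle
toy = {"dog": [1.0, 0.9, 0.0], "cat": [0.9, 1.0, 0.0], "car": [0.0, 0.1, 1.0]}
print(odd_one_out(toy))  # car
```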

7. Word Analogy Tasks

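Solve analogy tasks such as King - Man + Woman = ?

```python
# Uses the pre-trained vectors from section 1; the toy corpus contains none of these words
result = word2vec.most_similar(positive=['king', 'woman'], negative=['man'])
print(result)  # list of (word, score) pairs; with the GoogleNews vectors the top match is 'queen'
```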
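Under the hood this is the vector-offset method (often called 3CosAdd): add the normalized positive vectors, subtract the normalized negative ones, and rank the remaining words by cosine similarity to the result. A NumPy sketch with hypothetical hand-made vectors; the `analogy` helper is illustrative and only roughly mirrors what `most_similar` does.

```python
import numpy as np


def analogy(vectors, positive, negative, topn=1):
    """Rank words by cosine similarity to sum(unit positives) - sum(unit negatives)."""
    unit = {w: np.asarray(v, dtype=float) / np.linalg.norm(v) for w, v in vectors.items()}
    target = sum(unit[w] for w in positive) - sum(unit[w] for w in negative)
    target = target / np.linalg.norm(target)
    # Exclude the query words themselves from the candidates
    candidates = [w for w in unit if w not in positive and w not in negative]
    scored = sorted(((float(unit[w] @ target), w) for w in candidates), reverse=True)
    return [(w, s) for s, w in scored[:topn]]


# Hypothetical 3-d vectors; the dimensions loosely mean (royalty, maleness, fruitiness)
toy = {
    "king": [1.0, 1.0, 0.0],
    "queen": [1.0, -1.0, 0.0],
    "man": [0.0, 1.0, 0.0],
    "woman": [0.0, -1.0, 0.0],
    "apple": [0.0, 0.0, 1.0],
}
print(analogy(toy, positive=["king", "woman"], negative=["man"]))  # queen, score about 0.96
```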

8. Access the Vocabulary

Retrieve all words in the model’s vocabulary.

```python
vocabulary = list(model.wv.index_to_key)
print(f"Total words in vocabulary: {len(vocabulary)}")
```

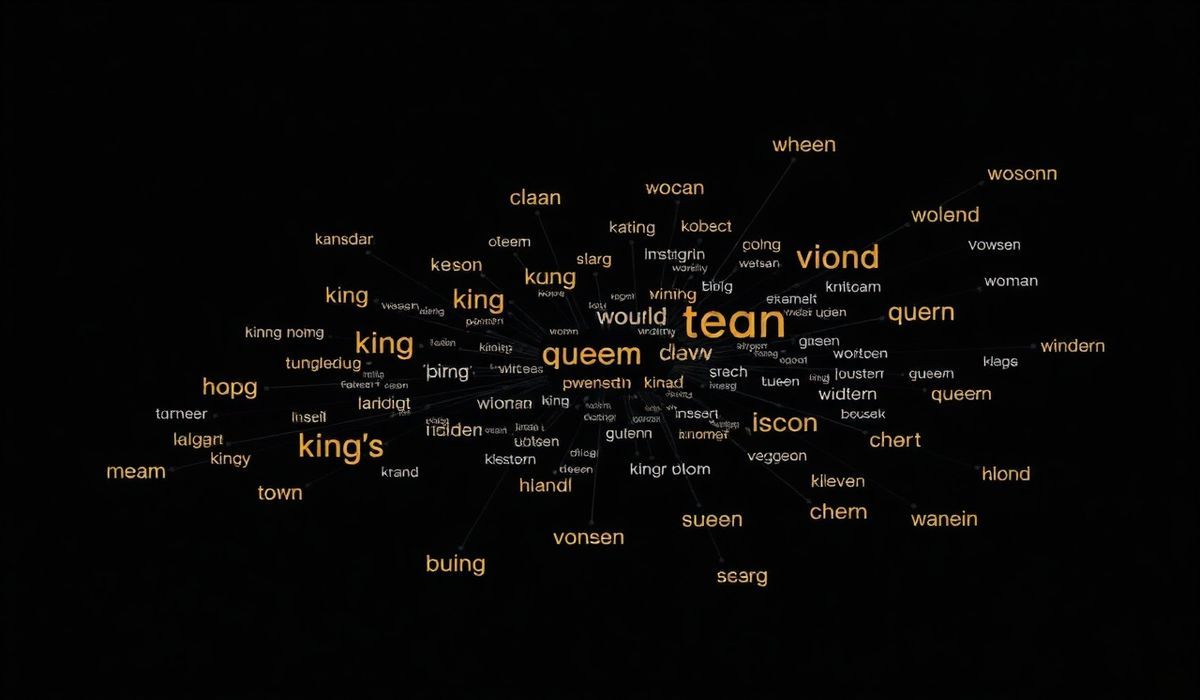

9. Visualizing Embeddings

You can reduce the dimensions of word vectors for visualization using t-SNE.

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.manifold import TSNE

# Pick a few words and their vectors (from the pre-trained GoogleNews vectors in section 1)
words = ['king', 'queen', 'man', 'woman', 'apple', 'orange']
vectors = np.array([word2vec[word] for word in words])

# Reduce dimensions with t-SNE; perplexity must be smaller than the number of points
tsne = TSNE(n_components=2, perplexity=3, random_state=42)
reduced_vectors = tsne.fit_transform(vectors)

# Plot each word at its 2-D position
plt.figure(figsize=(6, 6))
for i, word in enumerate(words):
    plt.scatter(reduced_vectors[i, 0], reduced_vectors[i, 1])
    plt.text(reduced_vectors[i, 0] + 0.01, reduced_vectors[i, 1] + 0.01, word, fontsize=12)
plt.show()
```
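t-SNE is stochastic and sensitive to `perplexity`, which must be smaller than the number of points. For a quick, deterministic view of a handful of words, a linear projection such as PCA is a common alternative. The sketch below swaps in PCA computed with NumPy’s SVD; `pca_2d` is a hypothetical helper (scikit-learn’s `sklearn.decomposition.PCA` does the same job), and random vectors stand in for real embeddings.

```python
import numpy as np


def pca_2d(vectors):
    """Project row vectors onto their first two principal components (PCA via SVD)."""
    x = np.asarray(vectors, dtype=float)
    x = x - x.mean(axis=0)                  # center each dimension
    _, _, vt = np.linalg.svd(x, full_matrices=False)
    return x @ vt[:2].T                     # coordinates along the top-2 components


# Random stand-ins for six 300-dimensional word vectors (hypothetical data)
rng = np.random.default_rng(0)
vectors = rng.normal(size=(6, 300))
reduced_vectors = pca_2d(vectors)
print(reduced_vectors.shape)  # (6, 2)
```

To use it in the plotting code above, replace the t-SNE step with `reduced_vectors = pca_2d(vectors)`. PCA preserves global structure (large distances) better, while t-SNE emphasizes local neighborhoods.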

10. Restrict Vocabulary

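Cap the final vocabulary size with `max_final_vocab`. Rather than keeping exactly the top N words, Gensim raises the effective `min_count` just enough for the vocabulary to fit under the cap, so the result can be smaller than N when several words share the cutoff frequency.

```python
# On a large corpus this keeps at most 5,000 words; min_count=1 lets the tiny toy corpus train at all
model = Word2Vec(sentences=corpus, vector_size=100, min_count=1, max_final_vocab=5000)
```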

11. Save Word Embeddings as KeyedVectors

Sometimes, you only need the word vectors, not the full Word2Vec model.

```python
from gensim.models import KeyedVectors

model.wv.save("vectors.kv")
kv_model = KeyedVectors.load("vectors.kv")
```

…

Conclusion

Word2Vec remains a versatile tool for text processing. Gensim’s APIs cover training, querying, and evaluating word embeddings, and pre-trained models such as the GoogleNews vectors give you a strong starting point for real-world tasks. Combined with visualization tools, document-similarity measures, or downstream NLP models, Word2Vec provides a practical foundation for tackling problems in machine learning and artificial intelligence.