Introduction to Charset Normalizer

Software that reads text from files, web pages, or other systems often receives bytes in an unknown encoding. Decoding them with the wrong codec produces errors or garbled characters. The Python library charset-normalizer detects the most likely encoding of such bytes and decodes them for you, which makes data processing pipelines more robust. This blog walks through its main features, with examples of its APIs to help you integrate it into your projects.

What is Charset Normalizer?

charset-normalizer is a Python library that detects the encoding of text and decodes (normalizes) it. It is a popular alternative to the chardet library that aims to be faster and more reliable. It can also explain its results: passing explain=True to its detection functions logs how each candidate encoding was evaluated.
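For code that already uses chardet, charset-normalizer ships a compatible detect() function that returns a plain dictionary with encoding, language, and confidence keys. Here is a minimal sketch, assuming charset-normalizer is installed (pip install charset-normalizer). The sample sentence is arbitrary:

```python
from charset_normalizer import detect

# Arbitrary sample: French text encoded as UTF-8
payload = "Le café est très chaud, déjà prêt à être servi.".encode("utf-8")

# detect() mimics chardet.detect() and returns a dict
result = detect(payload)

print(result["encoding"])    # detected encoding name
print(result["confidence"])  # float between 0 and 1, or None if nothing matched
print(result["language"])    # detected language, possibly an empty string
```

This is mainly a migration aid. New code gets more information from the from_bytes/from_path functions covered below.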

Key Features of Charset Normalizer

- High accuracy in detecting character encodings.

- Normalization of decoded text to a user-specified encoding.

- Easy-to-use APIs suitable for beginners and experienced developers alike.

- Comprehensive documentation and strong compatibility with Python 3.

Popular APIs Explained with Code Snippets

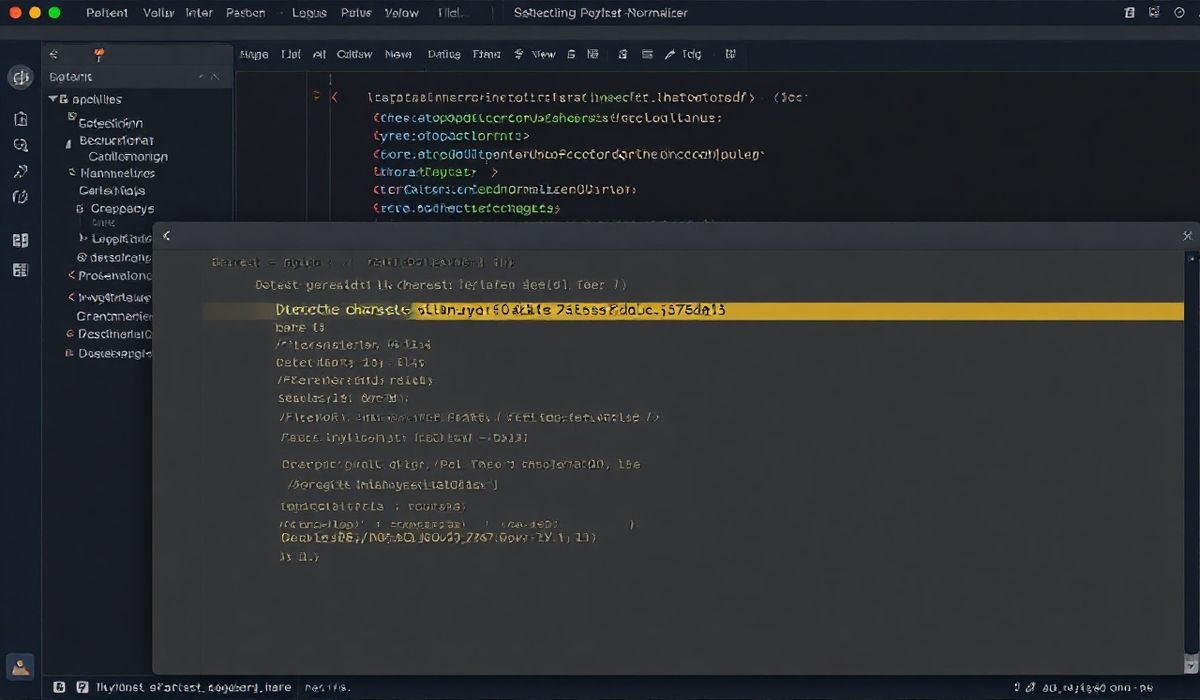

1. Detect Character Encoding

Use the from_path function to detect the encoding of a text file. It returns every plausible match, ranked from most to least likely, rather than a single answer.

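```python
from charset_normalizer import from_path

# from_path() returns a CharsetMatches object holding zero or more candidate matches
results = from_path('example.txt')

for result in results:
    print(f"Detected Encoding: {result.encoding}")
    # There is no single confidence score: a match reports how messy the decoded
    # text looks (chaos) and how well it fits a language (coherence)
    print(f"Chaos: {result.percent_chaos}%, Coherence: {result.percent_coherence}%")
```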

2. Parse and Normalize Text

The from_bytes function works directly with byte data, for example content received over a network. Each match decodes the bytes into a Python string, available through str(match).

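```python
from charset_normalizer import from_bytes

# UTF-8 bytes for "学生" ("student"); very short inputs are hard to classify reliably
byte_content = b'\xe5\xad\xa6\xe7\x94\x9f'

results = from_bytes(byte_content)

for result in results:
    # str() on a CharsetMatch returns the decoded text
    print(f"Normalized String: {str(result)}")
```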
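A decoded str is only half of normalization. To get bytes in a specific encoding, call output() on a match. It re-encodes the decoded text and returns bytes, in UTF-8 unless you pass another encoding name. Here is a minimal sketch, assuming a legacy Windows-1252 input made up for illustration:

```python
from charset_normalizer import from_bytes

# Hypothetical legacy input: French text stored as Windows-1252 bytes
legacy_bytes = "Déjà vu: les élèves ont été très présents à la réunion.".encode("cp1252")

best = from_bytes(legacy_bytes).best()

if best is not None:
    print(f"Detected: {best.encoding}")
    # output() re-encodes the decoded text; the default target is UTF-8
    utf8_bytes = best.output()
    print(utf8_bytes.decode("utf-8"))
```

Several single-byte code pages can decode the same bytes. The detected name may therefore be a close relative of cp1252 rather than cp1252 itself.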

3. Save Normalized Content

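Write the decoded content to a new UTF-8 file. Use only the best match, because looping over every match would overwrite the output file once per candidate.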

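```python
from charset_normalizer import from_path

results = from_path('example.txt')
best = results.best()  # most likely match, or None if nothing fits

if best is not None:
    with open('normalized_example.txt', 'w', encoding='utf-8') as f:
        f.write(str(best))  # str() gives the decoded text
```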
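You can also write the bytes returned by a match's output() method directly, choosing the target encoding by name (UTF-8 by default). The sketch below also uses from_fp, which accepts an already opened binary file object. The temporary directory and the German Windows-1252 input file are made up for illustration:

```python
import tempfile
from pathlib import Path

from charset_normalizer import from_fp

with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "example.txt"
    target = Path(tmp) / "normalized_example.txt"

    # Hypothetical input file: German text saved as Windows-1252
    source.write_bytes("Grüße aus München! Die Straße ist schön und die Bäckerei öffnet früh.".encode("cp1252"))

    # from_fp() reads from an open binary file object
    with source.open("rb") as fp:
        best = from_fp(fp).best()

    if best is not None:
        # Write the re-encoded bytes; output() defaults to UTF-8
        target.write_bytes(best.output())
        saved = target.read_text(encoding="utf-8")
        print(saved)
```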

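4. Check Files from the Command Line

Charset Normalizer also installs a command-line tool named normalizer for quick checks on text files. Pass it one or more file paths and it prints a JSON report for each.

```bash
# Type this in the terminal
normalizer example.txt

# Print only the detected encoding instead of the JSON report
normalizer --minimal example.txt
```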

A Practical App Example

Below is an example of a Python script that uses charset-normalizer to process multiple text files in a directory. The script detects each file's encoding and saves a UTF-8 copy in a separate output directory.

```python
import os

from charset_normalizer import from_path

directory = "text_files"
output_dir = "normalized_texts"

# Create the output directory if it does not already exist
os.makedirs(output_dir, exist_ok=True)

for filename in os.listdir(directory):
    if filename.endswith(".txt"):
        file_path = os.path.join(directory, filename)
        # Use only the most likely match; writing every candidate would
        # overwrite the same output file repeatedly
        best = from_path(file_path).best()

        if best is None:
            print(f"Skipping {filename}: no suitable encoding found")
            continue

        normalized_file_path = os.path.join(output_dir, filename)
        with open(normalized_file_path, 'w', encoding='utf-8') as f:
            f.write(str(best))
```
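The script above can be wrapped in a reusable function that also reports what it detected for each file. The function name normalize_directory and its report format are hypothetical, chosen for this sketch. The usage part builds a throwaway directory with made-up files in two encodings:

```python
import tempfile
from pathlib import Path
from typing import Dict, Optional

from charset_normalizer import from_path


def normalize_directory(src: Path, dst: Path, pattern: str = "*.txt") -> Dict[str, Optional[str]]:
    """Write a UTF-8 copy of every file in src matching pattern into dst.

    Returns a mapping of file name -> detected encoding (None when skipped).
    """
    dst.mkdir(parents=True, exist_ok=True)
    report: Dict[str, Optional[str]] = {}
    for path in sorted(src.glob(pattern)):
        best = from_path(path).best()
        if best is None:
            # No encoding fit (for example, binary data): skip the file
            report[path.name] = None
            continue
        # output() re-encodes the decoded text, to UTF-8 by default
        (dst / path.name).write_bytes(best.output())
        report[path.name] = best.encoding
    return report


with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "text_files"
    dst = Path(tmp) / "normalized_texts"
    src.mkdir()
    # Made-up input files in two different encodings
    (src / "a.txt").write_bytes("Café crème et pâtisseries françaises.".encode("utf-8"))
    (src / "b.txt").write_bytes("Crème brûlée, déjà prête à servir.".encode("cp1252"))

    report = normalize_directory(src, dst)
    print(report)

    # Read every output back as UTF-8 to confirm the conversion
    outputs = {p.name: p.read_text(encoding="utf-8") for p in dst.iterdir()}
```

Returning the report instead of printing inside the loop makes it easy to log or review files that were skipped or detected with an unexpected encoding.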

A script like this converts every text file in a project to one standard encoding, which prevents decoding errors later in data processing and analysis.

Conclusion

charset-normalizer simplifies working with text of unknown encoding. It detects the likely character set, decodes the content, and re-encodes it to the encoding you need, which is useful in small scripts and large data pipelines alike. The examples in this blog should help you integrate charset-normalizer into your Python projects.